The Cisco EtherChannel technology offers another method of scaling link bandwidth by bundling or aggregating multiple physical links into a single logical link.

2 to 8 physical Fast Ethernet (FE) or Gigabit Ethernet (GE) compatibly configured links can be aggregated as a single logical Fast EtherChannel (FEC) or Gigabit EtherChannel (GEC) link respectively.

EtherChannel provides a full-duplex bandwidth of up to 1600Mbps (8 x Fast Ethernet links) or 16Gbps (8 x Gigabit Ethernet links).

Higher-speed networking hardware that is early in the product life cycle is inevitably expensive.

EtherChannel provides incremental capacity upgrade to grow or expand the capacity between switches without having to continually purchase hardware for the next magnitude of bandwidth and throughput.

A single 200Mbps FE link can be incrementally expanded through a FEC up to 1600Mbps using 8 FE links.

The growth process can then begin again with a single 2Gbps GE link, which can be expanded through a GEC up to 16Gbps using 8 GE links; the process repeats again by moving to a single 20Gbps 10 Gigabit Ethernet link.

Link aggregation can be implemented when an intermediate link capacity is more appropriate.

Increasing link capacity by a factor of 10 may be overkill in some cases, and in fact it cannot really provide a 10:1 performance improvement, because the bottleneck may simply move to another point in the end-to-end chain.

Link aggregation is often abbreviated as LAG.

Other terms include trunking, link bundling, NIC bonding, and NIC teaming.

A common source of confusion is that other vendors, eg: Sun Solaris, Brocade, etc, often use the term “trunking” to refer to link aggregation.

Cisco, in contrast, defines a trunk as an L2 link that carries frames belonging to multiple VLANs.

Connecting switches using multiple links would create bridging loops.

Besides that, redundant links are unused due to the Spanning Tree Protocol loop prevention mechanism.

EtherChannel bundles multiple physical links into a single logical link and hence prevents the problem.

The logical link can act as either an L2 access or trunk link; or as an L3 routed port.

When several EtherChannels exist between a pair of switches, spanning tree will only place one of the bundles into forwarding state while blocking others to prevent bridging loops.

Blocking a redundant EtherChannel means blocking all the physical links that belong to the EtherChannel.

Although an EtherChannel is treated as a single logical link, it does not truly provide a total bandwidth equal to the aggregate bandwidth of all the component physical links!

When it comes to EtherChannel, 1 + 1 does not equal 2, and 1 + 1 + 1 + 1 does not equal 4! :-)

Suppose that an FEC link is made up of 4 x 100Mbps full-duplex Fast Ethernet links.

Although the FEC link can provide a total throughput of 800Mbps if all links are fully loaded, each conversation across the FEC is still limited to 200Mbps!

The traffic is distributed across the individual links within the EtherChannel.

Each link operates at its inherent speed and transmits only the frames placed upon it by the load distribution algorithm. If a link within the bundle is favored by a particular algorithm, it would transmit a disproportionate amount of traffic – the load is not always being distributed equally among the individual links!

EtherChannel also increases link redundancy for higher availability: if any one of the links within the bundle fails, the traffic sent through that link is automatically moved to an adjacent link.

The loss of a member link within an EtherChannel only reduces the available capacity and does not disrupt communications (fail soft); nor does it trigger a topology change or spanning tree recalculation.

Failover occurs within a few milliseconds and is therefore transparent to the end users.

As more links fail, more traffic is moved to further adjacent links.

As links are restored, the load is automatically redistributed among the active links.

The following guidelines and restrictions apply when bundling physical ports into an EtherChannel:

- The members must have the same Ethernet speed, duplex, and MTU settings configured. [1]

- The members must be configured with the same storm control and flow control settings.

- The members must belong to the same VLAN when in access mode.

- The members must be in trunking mode, have the same native VLAN, and carry the same set of allowed VLANs when the EtherChannel is used as a trunk.

- The members must be configured with identical spanning tree configuration. [2]

- The members and port channel can have different interface description and load interval settings.

- After an EtherChannel is configured, configuration changes applied to the logical port channel interface affect the EtherChannel and the assigned physical ports; while configuration changes applied to a physical port affect only the physical port where the configuration is applied. [3]

- The members cannot be a Switched Port Analyzer (SPAN) destination port.

[2] – Physical ports with different STP port path costs are still compatible to form an EtherChannel.

[3] – Except the load interval setting.

EtherChannel Traffic Distribution and Load Balancing

Traffic in an EtherChannel is distributed across the individual bundled links in a deterministic fashion; however, the load is not necessarily balanced equally across all the links.

Frames are distributed upon a specific link of an aggregated link as a result of a hashing algorithm, which can use the source MAC or IP address, the destination MAC or IP address, a combination of the source and destination MAC or IP addresses, and/or TCP/UDP port numbers.

The hashing algorithm computes a binary pattern that selects a link number in the bundle to carry a frame.

The hashing operation produces a number in the range of 0 – 7.

The same range is used regardless of the number of physical links in the EtherChannel.

The load share bits are assigned sequentially to each link in the bundle; and each physical link is assigned with one or more of these 8 values, depending upon the number of links in the EtherChannel.

Consequently, the load share bits for existing ports change as a member link joins or leaves the bundle.

EtherChannel Load Share Bits Assignment

On an EtherChannel with 8 physical links, each of the links is assigned with a single value.

On an EtherChannel with 6 physical links, 2 of the links are assigned with 2 load share bit values, while the remaining 4 links are each assigned with a single load share bit value.

By assuming a theoretical perfect distribution, 2 of the links will receive twice as much traffic as the other 4.

The only possible way to distribute traffic equally across all links in an EtherChannel to achieve perfect load distribution of traffic is to implement one with 2, 4, or 8 physical links (power-of-2).
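
The assignment of the 8 hash values to the member links can be sketched in Python. This is a minimal illustration only: it assumes the values are dealt out sequentially in round-robin order, which reproduces the 2:2:1:1:1:1 ratio described for 6 links, but the exact value-to-link mapping on a given platform may differ. The names `assign_load_share` and `share_ratio` are hypothetical helpers, not Cisco terminology.

```python
HASH_VALUES = 8  # the hash result is always in the range 0-7


def assign_load_share(num_links: int) -> dict[int, list[int]]:
    """Map each link (numbered from 1) to the hash values it carries.

    Assumption: the 8 values are dealt out sequentially, round-robin style.
    """
    if not 1 <= num_links <= HASH_VALUES:
        raise ValueError("an EtherChannel bundles 1 to 8 active links")
    shares: dict[int, list[int]] = {link: [] for link in range(1, num_links + 1)}
    for value in range(HASH_VALUES):
        shares[value % num_links + 1].append(value)
    return shares


def share_ratio(num_links: int) -> list[int]:
    """Return the number of hash values held by each link, in link order."""
    return [len(values) for values in assign_load_share(num_links).values()]


if __name__ == "__main__":
    for n in (2, 3, 4, 6, 8):
        print(n, "links:", share_ratio(n))
```

Under this assumption only bundles of 2, 4, or 8 links give every link the same number of hash values; any other link count leaves some links with one more value than the rest.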

Regardless of the load distribution algorithm, the method will still produce a hash value of 0 – 7, which will be used to assign the link to carry a frame according to the table above.

If only one address or port number is hashed, a switch forwards each frame by using one or more low-order bits or least significant bits (LSBs) of the hash value as an index into the bundled links.

If 2 addresses or port numbers are hashed, a switch performs an exclusive-OR (XOR) operation on one or more low-order bits of the addresses or TCP/UDP port numbers and uses the result as an index into the bundled links.

The XOR operation is performed independently upon each bit position in the address value.

If the 2 address bits have the same bit value, the XOR result is always 0;

If the 2 address bits differ, the XOR result is always 1.

Frames can then be distributed statistically among the links with the assumption that the MAC or IP addresses are distributed statistically throughout the network.

An EtherChannel consisting of 2 bundled links requires a 1-bit index, in which the index is either 0 or 1, and physical link 1 or 2 will be selected accordingly.

Either the last address bit or the XOR of the last address bits of the IP or MAC address can be used as the index.

Below shows the XOR operation upon a 2-link EtherChannel bundle using the source and destination addresses.

| Binary Addresses | XOR Result and Link Number |
| --- | --- |
| Address 1: …xxxxxxx0 Address 2: …xxxxxxx0 | …xxxxxxx0: Use link 1 |
| Address 1: …xxxxxxx0 Address 2: …xxxxxxx1 | …xxxxxxx1: Use link 2 |
| Address 1: …xxxxxxx1 Address 2: …xxxxxxx0 | …xxxxxxx1: Use link 2 |
| Address 1: …xxxxxxx1 Address 2: …xxxxxxx1 | …xxxxxxx0: Use link 1 |

The 4-link and 8-link EtherChannels use a hash of the rightmost least significant 2 and 3 bits respectively.

In a 4-link EtherChannel, the XOR is performed upon the lowest 2 bits of the address values and results in a 2-bit XOR value (each bit is computed separately) or a link number from 1 to 4.

In an 8-link EtherChannel, the XOR is performed upon the lowest 3 bits of the address values and results in a 3-bit XOR value (each bit is computed separately) or a link number from 1 to 8.
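
The XOR-based link selection for 2-, 4-, and 8-link bundles can be sketched as follows. This is an illustrative model of the description above, not the actual switch implementation; `select_link` and `mac_to_int` are hypothetical helper names, and the sample addresses are made up.

```python
def mac_to_int(mac: str) -> int:
    """Convert a MAC such as 000d.661f.a800 or 00:0d:66:1f:a8:00 to an integer."""
    return int(mac.replace(".", "").replace(":", "").replace("-", ""), 16)


def select_link(addr1: int, addr2: int, num_links: int) -> int:
    """Return the 1-based link number chosen for a pair of addresses.

    The low-order 1, 2, or 3 bits of the two addresses are XORed bit by bit,
    and the result (0-based) is used as the index into the bundle.
    """
    index_bits = {2: 1, 4: 2, 8: 3}
    if num_links not in index_bits:
        raise ValueError("this sketch covers 2-, 4-, and 8-link bundles only")
    mask = (1 << index_bits[num_links]) - 1  # keep only the low-order bits
    return ((addr1 ^ addr2) & mask) + 1


if __name__ == "__main__":
    src = mac_to_int("000d.661f.a800")  # sample address, low bits ...00
    dst = mac_to_int("000d.661f.a803")  # sample address, low bits ...11
    print(select_link(src, dst, 2))  # 1-bit index
    print(select_link(src, dst, 4))  # 2-bit index
    print(select_link(src, dst, 8))  # 3-bit index
```

Because XOR is symmetric, swapping the two addresses yields the same link in this model.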

EtherChannel provides inherent protection against bridging loops.

When switch ports are bundled into an EtherChannel, inbound (received) broadcasts and multicasts are not sent back out any of the member ports in the bundle. Outbound broadcast and multicast frames are hashed and load balanced like unicast frames; the broadcast or multicast address is used in the hashing calculation to select an outbound link.

Below shows the XOR operation upon a 4-link EtherChannel bundle using the source and destination addresses.

Binary Addresses

|

XOR Result and Link Number

|

| Address 1: …xxxxxx00 Address 2: …xxxxxx00 | …xxxxxx00: Use link 1 |

| Address 1: …xxxxxx00 Address 2: …xxxxxx01 | …xxxxxx01: Use link 2 |

| Address 1: …xxxxxx00 Address 2: …xxxxxx10 | …xxxxxx10: Use link 3 |

| Address 1: …xxxxxx00 Address 2: …xxxxxx11 | …xxxxxx11: Use link 4 |

| Address 1: …xxxxxx01 Address 2: …xxxxxx00 | …xxxxxx01: Use link 2 |

| Address 1: …xxxxxx01 Address 2: …xxxxxx01 | …xxxxxx00: Use link 1 |

| Address 1: …xxxxxx01 Address 2: …xxxxxx10 | …xxxxxx11: Use link 4 |

| Address 1: …xxxxxx01 Address 2: …xxxxxx11 | …xxxxxx10: Use link 3 |

| Address 1: …xxxxxx10 Address 2: …xxxxxx00 | …xxxxxx10: Use link 3 |

| Address 1: …xxxxxx10 Address 2: …xxxxxx01 | …xxxxxx11: Use link 4 |

| Address 1: …xxxxxx10 Address 2: …xxxxxx10 | …xxxxxx00: Use link 1 |

| Address 1: …xxxxxx10 Address 2: …xxxxxx11 | …xxxxxx01: Use link 2 |

| Address 1: …xxxxxx11 Address 2: …xxxxxx00 | …xxxxxx11: Use link 4 |

| Address 1: …xxxxxx11 Address 2: …xxxxxx01 | …xxxxxx10: Use link 3 |

| Address 1: …xxxxxx11 Address 2: …xxxxxx10 | …xxxxxx01: Use link 2 |

| Address 1: …xxxxxx11 Address 2: …xxxxxx11 | …xxxxxx00: Use link 1 |

The conversation between 2 devices across an EtherChannel is always being sent through the same physical link as the 2 addresses stay the same. When a device communicates with several other devices, chances are that the addresses are distributed equally with 0s and 1s in the last bit – odd and even address values. Note that the load distribution is still proportional to the volume of traffic passing between pairs of hosts.

Suppose that there are 2 pairs of hosts communicating across a 2-link EtherChannel, and each pair of addresses is being computed by the hash algorithm and results in a unique link index. Frames for one pair of hosts always travel across one link in the channel, while frames for another pair travel across another link. Both links are being used as a result of the hash algorithm and the load is being distributed across the links in the channel.

Note: The file transfer data from a server to a client and the acknowledgements from the client to the server may be transmitted across different physical links – asymmetric.

However, if one pair of hosts has a much greater volume of traffic than the other pair, one link in the channel will be used much more than the other, which results in an imbalanced load distribution.

Other hashing methods should be considered and used to solve such problems, eg: a method that uses both the source and destination addresses along with UDP or TCP port numbers can distribute traffic much differently – packets are being placed on links based on the applications used between the hosts.

Using the destination MAC or IP address as a component of a load balancing method ensures that packets with the same destination MAC address will always travel over the same physical link for in-order delivery. However, if the traffic is going only to a single MAC address and the load balancing method used is based upon the destination MAC address, the same physical link will always be chosen! Using source MAC or IP addresses might result in better load balancing.
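
The effect of the chosen hash input on the distribution can be illustrated with a small simulation. This is a sketch under simplifying assumptions: a 4-link bundle, link selection from the low-order 2 bits only, and made-up addresses for 8 clients sending to a single server. The names `pick_link`, `link_usage`, `SERVER`, and `flows` are hypothetical.

```python
from collections import Counter


def pick_link(src: int, dst: int, method: str, num_links: int = 4) -> int:
    """Return the 1-based link for a frame, given the hash input method."""
    if num_links not in (2, 4, 8):
        raise ValueError("this sketch covers 2-, 4-, and 8-link bundles only")
    mask = num_links - 1  # low-order 1, 2, or 3 bits
    if method == "src":
        index = src & mask
    elif method == "dst":
        index = dst & mask
    elif method == "src-dst":
        index = (src ^ dst) & mask
    else:
        raise ValueError(f"unknown method: {method}")
    return index + 1


def link_usage(flows, method: str, num_links: int = 4) -> Counter:
    """Total the frames carried by each link for (src, dst, frames) flows."""
    usage: Counter = Counter()
    for src, dst, frames in flows:
        usage[pick_link(src, dst, method, num_links)] += frames
    return usage


# Eight made-up clients (0x10-0x17) each send 100 frames to one server (0xA0).
SERVER = 0xA0
flows = [(client, SERVER, 100) for client in range(0x10, 0x18)]

if __name__ == "__main__":
    for method in ("dst", "src", "src-dst"):
        print(method, dict(link_usage(flows, method)))
```

In this model the destination-based method places all 800 frames on one link, while the source-based method spreads them evenly because the client addresses happen to vary in their low-order bits.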

The hashing operation is performed based upon L2 MAC addresses, L3 IP addresses, or L4 TCP / UDP port numbers; and whether from the source or destination or both source and destination addresses or ports.

The port-channel load-balance {method} global configuration command configures the frame distribution or load balancing method across all the EtherChannel physical links on a switch.

Note: The load balancing method is set on a global basis instead of a per-port basis. This restriction forces network architects to choose the most optimal method for all scenarios!

The table below lists the possible values for the method parameter along with the hashing operation.

| method value | Hash Input | Hash Operation | Switch Model |
| --- | --- | --- | --- |
| src-mac | Source MAC address | bits | 2950/2960/3550/3560/3750/4500/6500 |
| dst-mac | Destination MAC address | bits | 2950/2960/3550/3560/3750/4500/6500 |
| src-dst-mac | Source and destination MAC addresses | XOR | 2960/3560/3750/4500/6500 |
| src-ip | Source IP address | bits | 2960/3560/3750/4500/6500 |
| dst-ip | Destination IP address | bits | 2960/3560/3750/4500/6500 |
| src-dst-ip | Source and destination IP addresses | XOR | 2960/3560/3750/4500/6500 |
| src-port | Source TCP / UDP port number | bits | 4500/6500 |
| dst-port | Destination TCP / UDP port number | bits | 4500/6500 |
| src-dst-port | Source and destination TCP / UDP port numbers | XOR | 4500/6500 |
| src-mixed-ip-port | Source IP address and TCP / UDP port number | - | 6500 |
| dst-mixed-ip-port | Destination IP address and TCP / UDP port number | - | 6500 |
| src-dst-mixed-ip-port | Source and destination IP addresses and TCP / UDP port numbers | XOR | 6500 |

The default configuration for the Catalyst 2950, 2960, 3550, 3560, and 3750 is src-mac for L2 switching.

L3 switching on EtherChannel always uses the src-dst-ip method, even though it is not configurable.

Always select the load balancing method that provides the greatest distribution or variety when the channel links are being indexed. Also consider the type of addressing that is being used on the network. If it is mostly IP traffic, implement load balancing according to IP addresses or TCP / UDP port numbers.

For non-IP frames, eg: IPX and SNA frames, which are unable to meet the IP load balancing criteria, the switch automatically falls back upon the next lower method. Since MAC addresses are always present in Ethernet networks, the switch distributes those frames based upon their MAC addresses.

Normally, the default load balancing method should result in a statistical distribution of frames.

However, we should determine whether the EtherChannel is imbalanced according to the traffic pattern.

Issue the show etherchannel port-channel EXEC command to verify the effectiveness of a load balancing method through the hexadecimal Load value for each link in the channel, which provides the traffic load of each link relative to the others.

    C2950#sh etherchannel port-channel
                    Channel-group listing:
                    ----------------------

    Group: 1
    ----------
                    Port-channels in the group:
                    ---------------------------

    Port-channel: Po1
    ------------

    Age of the Port-channel   = 0d:00h:00m:46s
    Logical slot/port   = 1/0          Number of ports = 2
    GC                  = 0x00000000   HotStandBy port = null
    Port state          = Port-channel Ag-Inuse
    Protocol            =    -

    Ports in the Port-channel:

    Index   Load   Port     EC state        No of bits
    ------+------+------+------------------+-----------
      0     00     Fa0/1    On/FEC             0
      0     00     Fa0/2    On/FEC             0

    Time since last port bundled:    0d:00h:00m:05s    Fa0/2
    C2950#
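
The hexadecimal Load values can be compared with a short Python sketch. It assumes that each Load value is an 8-bit mask whose set bits correspond to the hash values assigned to that port, which is consistent with the "No of bits" column in the output; treat that interpretation, and the helper names `load_share_bits` and `relative_load`, as assumptions. The values 55 and AA used in the demonstration are made-up examples, not taken from the output above.

```python
def load_share_bits(load_hex: str) -> int:
    """Count the bits set in a two-digit hexadecimal Load value."""
    value = int(load_hex, 16)
    if not 0 <= value <= 0xFF:
        raise ValueError("Load is expected to be an 8-bit value")
    return bin(value).count("1")


def relative_load(loads: dict[str, str]) -> dict[str, float]:
    """Return each port's share of the assigned hash values (0.0 to 1.0)."""
    bits = {port: load_share_bits(value) for port, value in loads.items()}
    total = sum(bits.values())
    if total == 0:
        # No hash values assigned yet, as in the freshly bundled output above.
        return {port: 0.0 for port in bits}
    return {port: count / total for port, count in bits.items()}


if __name__ == "__main__":
    print(relative_load({"Fa0/1": "00", "Fa0/2": "00"}))  # values from the output
    print(relative_load({"Fa0/1": "55", "Fa0/2": "AA"}))  # made-up example values
```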

Sample EtherChannel Load Balancing Scenario

Above is a relatively common topology for which it is appropriate to change the default load balancing method.

A group of users connected to SW1 reach a group of servers connected to SW2 through an EtherChannel.

The default load balancing method is based upon the source MAC address of each packet.

Even though MAC addresses are unique, the links often are not being used equally due to a common usage pattern in which one server often sends and/or receives more traffic than others.

The default load balancing method that is based upon the source MAC address would be fine for SW1; but it would be terrible on SW2, as the packets from the server that utilizes a great deal of bandwidth are being delivered across a particular physical link, which results in packet drop due to link saturation.

The ideal configuration would be to have source MAC address load balancing on SW1, and destination MAC address load balancing on SW2. Unfortunately this solution only works well if all servers and users are on different switches, as shown in this sample topology.

Note: It is not necessary to use the same distribution algorithm in both directions of the aggregation link. It is common to use source MAC address forwarding on a switch connected to a router, and destination MAC address forwarding on the router.

Single Server to Single NAS

The figure above shows an interesting link aggregation problem.

A server and a NAS device connect to a switch via an EtherChannel.

All of the filesystems for the server are mounted on the NAS device, and the server is heavily used.

The bandwidth required between the server and the NAS device is in excess of 2Gbps.

The source MAC address and destination MAC address cannot be used for load balancing as there is only one address in each case. A combination of source and destination MAC addresses, or the source and/or destination IP addresses cannot be used as well for the same reason. The source or destination port numbers cannot be used as well as they don’t change once they are negotiated by the application. One possibility is to change the server and/or NAS device so that each link has its own MAC address, provided that the drivers support this. However the packets will still be sourced from and destined for only one of those addresses.

Below lists the possible solutions for this problem:

- Manual load balancing – split the bundle back into 4 x 1Gbps links, each with its own IP network, and mount different filesystems via different IP addresses. This approach is rather complicated.

- Faster links – employ a faster physical link, eg: 10Gbps Ethernet, to replace the aggregated link.

These 48-port modules have 6 groups of oversubscribed ports with 8 ports each.

| Group 1 | Ports 1, 2, 3, 4, 5, 6, 7, 8. |

| Group 2 | Ports 9, 10, 11, 12, 13, 14, 15, 16. |

| Group 3 | Ports 17, 18, 19, 20, 21, 22, 23, 24. |

| Group 4 | Ports 25, 26, 27, 28, 29, 30, 31, 32. |

| Group 5 | Ports 33, 34, 35, 36, 37, 38, 39, 40. |

| Group 6 | Ports 41, 42, 43, 44, 45, 46, 47, 48. |

EtherChannel Negotiation Protocols

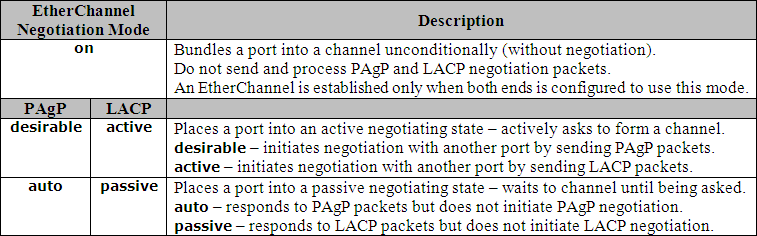

EtherChannel dynamic negotiation allows directly connected devices, eg: switches, routers, servers, etc, with ports of similar characteristics to negotiate and aggregate links as EtherChannels between them. The 2 protocols available to dynamically negotiate and control EtherChannels are:

- Port Aggregation Protocol (PAgP), a Cisco-proprietary protocol.

- Link Aggregation Control Protocol (LACP), a standards-based protocol defined in IEEE 802.3ad.

Ports configured to use PAgP cannot form EtherChannels with ports configured to use LACP, and vice versa.

Additionally, PAgP and LACP do not interoperate with manually configured ports (mode on).

Caution: Mixing manual mode (on) with PAgP and LACP modes (desirable / auto and active / passive), or with ports without EtherChannel configuration, can result in bridging loops and broadcast storms!

Note: PAgP and LACP EtherChannel groups can coexist on the same switch.

Below lists the advantages of dynamic link aggregation over static link aggregation configuration:

- Proper failover upon a link failure when there is a media converter between the devices in which the peer will not detect the link down. With static link aggregation, the peer would continue sending frames through the broken link and causing packet loss.

- The device can confirm that the configuration at the other end is able to handle link aggregation. With static link aggregation, a cabling or configuration mistake could go undetected and cause undesirable network behavior.

Cisco developed the Port Aggregation Protocol (PAgP) to provide automatic EtherChannel negotiation and configuration between Catalyst switches.

PAgP packets are exchanged between switches over aggregation-capable ports.

Neighbors are identified and their capabilities are learned and compared with the local switch capabilities.

Ports that have the same neighbor device ID and port group capability are bundled together as a bidirectional point-to-point EtherChannel link.

PAgP forms an EtherChannel only upon ports that are configured for either same VLANs or trunking.

PAgP can be configured in active mode – desirable, which places a switch port into the active negotiating state by sending PAgP packets to actively negotiate with the far end switch to establish an EtherChannel; or in passive mode – auto, which places a switch port into the passive negotiating state that only initiates PAgP negotiation to establish an EtherChannel upon receiving PAgP packets from the far end switch. A port group in desirable mode forms a channel with another port group in desirable or auto mode; while a port group in auto mode forms a channel only with another port group in desirable mode.
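
The mode compatibility rules can be summarized in a small Python sketch. The PAgP and mode on behavior follows the text above; the LACP active and passive modes are assumed to follow the same initiator and responder pattern as desirable and auto. `forms_channel` is a hypothetical helper that models only the negotiation outcome, not the side effects of a mismatch.

```python
PAGP_MODES = {"desirable", "auto"}
LACP_MODES = {"active", "passive"}
INITIATING_MODES = {"desirable", "active"}  # modes that send packets unprompted
ALL_MODES = PAGP_MODES | LACP_MODES | {"on"}


def forms_channel(local: str, remote: str) -> bool:
    """Return True if the two channel modes result in an EtherChannel."""
    modes = {local, remote}
    if not modes <= ALL_MODES:
        raise ValueError(f"unknown mode in {sorted(modes)}")
    if modes == {"on"}:
        return True   # static bundle, no negotiation involved
    if "on" in modes:
        return False  # mode on does not interoperate with PAgP or LACP
    if not (modes <= PAGP_MODES or modes <= LACP_MODES):
        return False  # PAgP ports cannot form a channel with LACP ports
    # At least one end must actively initiate the negotiation.
    return bool(modes & INITIATING_MODES)


if __name__ == "__main__":
    for pair in [("desirable", "auto"), ("auto", "auto"),
                 ("active", "passive"), ("on", "desirable")]:
        print(pair, forms_channel(*pair))
```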

Below shows how to configure an EtherChannel by assigning switch ports for PAgP negotiation:

    Switch(config)#int [range] type mod/num [- type mod/num] [, type mod/num]
    Switch(config-if)#switchport trunk encapsulation dot1q
    Switch(config-if)#switchport mode trunk
    Switch(config-if)#[channel-protocol pagp] (optional)
    Switch(config-if)#channel-group port-group-num mode {auto | desirable} [non-silent]

PAgP and LACP are available as a channel negotiation protocol for all Cisco IOS-based Catalyst switches; while LACP is the only available channel negotiation protocol for all Cisco NX-OS-based Nexus switches. Each interface that will be included in a single EtherChannel bundle must be configured and assigned to the same unique channel group number. The channel group number ranges differ among switch models.

The channel-protocol {lacp | pagp} interface subcommand restricts an EtherChannel to LACP or PAgP.

It is optional, as the channel-group interface subcommand can also set the mode for an EtherChannel.

Once the EtherChannel negotiation protocol is set by either one of the commands, the setting cannot be overridden by the other command – issuing the channel-protocol [pagp | lacp] interface subcommand after the channel-group {port-group-num} mode on interface subcommand will be rejected.

The channel-group {port-group-num} mode {on | desirable [non-silent] | auto [non-silent] | active | passive} interface subcommand assigns an Ethernet switch port to an EtherChannel group and enables an EtherChannel mode.

PAgP operates in the silent submode by default.

When the optional non-silent keyword is not being specified along with the auto or desirable mode, the silent operation is assumed. The silent keyword is not shown in the Cisco IOS configuration but is shown in the CatOS configuration as below.

set port channel 1/1-2 mode auto silent

Below describes the operation and usage of the PAgP silent and non-silent submodes:

| silent | Bundles ports into a channel even if the device at the other end never transmits PAgP packets – a silent non-PAgP-capable device, eg: a file server or packet analyzer. This idea seems to go against the purpose of using a channel negotiation protocol, in which both ends are supposed to negotiate a channel – how can both ends negotiate something without receiving anything? The key phrase here is "if the other end is silent". The silent submode listens for any PAgP packets from the remote end during the channel negotiation process. If no PAgP packet is received, the silent submode assumes that a channel should be established anyway, and does not expect to receive any more PAgP packets from the remote end. |

| non-silent | Requires each port to receive PAgP packets before bundling them into a channel. Normally being used when connecting to a PAgP-capable neighboring switch and expect PAgP packets from the other end, in which the switches should actively negotiate a channel and should not wait to listen for silent partners. |

The default silent submode works for both PAgP and non-PAgP neighbors, so why should we bother to use the non-silent submode? :-) UPDATE: The non-silent submode is meant for legacy Catalyst 5000 fiber-based Fast Ethernet and Gigabit Ethernet interfaces to protect against unidirectional link failures. IOS-based Catalyst switches employ UDLD to detect such failures much faster than the PAgP non-silent operation.

Note: The author did not manage to establish a channel to a packet sniffing workstation using any combination of the PAgP modes and submodes on the switch port end.

The transition period from the "physical link up" state (LINK-3-UPDOWN) to the "line protocol up" state (LINEPROTO-5-UPDOWN) for switch ports without any channel protocol configuration is 1 second; whereas the same transition period for switch ports configured with PAgP requires 5 to 15 seconds! When the switch ports at both ends of a channel are configured with the PAgP auto mode and the default silent submode, all interfaces wait to be asked to form a channel, and listen for incoming PAgP packets before accepting silent channel partners. The delay for the link transition is approximately 15 seconds. Note that the links between the switches will eventually come up, but not as a channel, because neither port will initiate negotiation (adhering to the PAgP desirable and auto negotiation rule).

The spanning tree protocol will perform the necessary blocking to prevent bridging loops.

There may be an additional 30-second delay before actual traffic can pass through the links due to the transitions through the spanning tree listening and learning stages.

Link Aggregation Control Protocol (LACP)

LACP is a standards-based link aggregation protocol that performs a similar function to PAgP.

LACP is defined in IEEE 802.3ad (also known as IEEE 802.3 Clause 43 – Link Aggregation).

LACP was based on a proposal from Cisco derived from the proprietary PAgP.

| Cisco licensed the Fast EtherChannel technology (and the link aggregation control protocol – PAgP) to a few equipment manufacturers, and it became the de facto standard for link aggregation until the development of the IEEE 802.3ad LACP. Cisco was an active participant in and contributor to the development of the IEEE-standard LACP, which is expected to be widely deployed in the future. The current Cisco data center switching portfolio of Nexus family switches supports only LACP. |

LACP only supports full-duplex links; while both PAgP and mode on support half-duplex links.

Half-duplex links in an LACP EtherChannel are placed in the suspended state.

LACP packets (LACPDUs) are exchanged between switches over aggregation-capable ports – switch ports configured in active or passive channel operation modes.

LACP is a Slow Protocol, which does not demand high performance, and hence can be implemented in an inexpensive microprocessor. Slow Protocols must limit their transmissions to no more than 5 frames per second (and hence the name). Note: The Wireshark display filter for LACP is "slow".

As with PAgP, neighbors are detected and identified through the negotiation between the remote and local switch and port group capabilities.

LACP assigns roles upon channel endpoints – Actor and Partner.

The actor and partner are the local and remote interfaces in an LACP exchange respectively.

Both devices are Actors and both are Partners; the terms are meaningful relative only to each device entity.

The Partner information in the LACP message received from the Partner constitutes the Partner’s view of the Actor. LACP can compare the Partner’s Partner information with its own states to make sure that the Partner has received the Actor’s information correctly.

LACPDUs are exchanged periodically between the actor and partner at either slow (every 30 seconds) or fast (every second) intervals. The interval rates can be different between the channel endpoints. The transmitter honors the rate requested by the receiver – the Actor sets its transmission frequency as requested by its Partner. As the Partner will time out if LACP messages are not received on time, the Actor must ensure that LACP messages are sent as often as the Partner requested.

The lacp rate {normal | fast} interface subcommand [1] sets the ingress rate of LACPDUs for an interface.

The duration of the LACP timeout value is 3 times the LACP rate interval.

A shorter timeout period provides more rapid detection of link configuration changes and convergence.

[1] – Only available on the Catalyst 6500 Series, Nexus 7000 Series, and Nexus 5000 Series switches.
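
The relationship between the LACP rate and the timeout can be worked through in a few lines of Python. This sketch assumes that the normal keyword corresponds to the slow 30-second interval and the fast keyword to the 1-second interval; `lacp_timeout` is a hypothetical helper name.

```python
# Assumed mapping of the lacp rate keywords to LACPDU intervals in seconds.
LACP_INTERVAL_SECONDS = {"normal": 30, "fast": 1}
TIMEOUT_MULTIPLIER = 3  # the timeout is 3 times the rate interval


def lacp_timeout(rate: str) -> int:
    """Return the LACP timeout in seconds for the given rate keyword."""
    if rate not in LACP_INTERVAL_SECONDS:
        raise ValueError(f"unknown LACP rate: {rate}")
    return TIMEOUT_MULTIPLIER * LACP_INTERVAL_SECONDS[rate]


if __name__ == "__main__":
    for rate in ("normal", "fast"):
        print(rate, lacp_timeout(rate), "seconds")
```

With these assumptions the partner is declared lost after 90 seconds at the normal rate and after 3 seconds at the fast rate.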

LACP exchanges state information independently on each aggregatable link.

Independent LACP messages are sent on each link to provide link-specific information to the partner.

Aggregations are maintained in the steady state through the timely exchange of state information.

The appropriate actions should be taken upon the system states. Should the configuration change or fail, the partner will timeout and take the appropriate action based on the new state.

The LACP System Priority (1 to 65’535) of a switch is configured automatically with the default value of 32’768 or can be manually defined using the lacp system-priority {lacp-sys-priority} global command.

The switch with the lower System Identifier (2-byte System Priority + 6-byte switch MAC address) will make decisions about which links to be actively participating in the EtherChannel at a given time. Therefore the switch assigned with a lower System Priority will become the decision maker.

When both switches have the same default System Priority (32’768), the one with the lower MAC address will become the decision maker.

LACP System Identifier follows the same style and usage as the Spanning Tree Protocol Bridge Identifier.
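
The decision maker election can be sketched by comparing System Identifiers as (System Priority, MAC address) pairs, where the lower pair wins. The helper names `system_id` and `decision_maker`, the switch names, and the second MAC address are made up for illustration.

```python
def system_id(priority: int, mac: str) -> tuple[int, int]:
    """Build a comparable System Identifier from priority and dotted MAC."""
    if not 1 <= priority <= 65535:
        raise ValueError("LACP System Priority ranges from 1 to 65535")
    return (priority, int(mac.replace(".", ""), 16))


def decision_maker(switch_a: tuple[str, int, str],
                   switch_b: tuple[str, int, str]) -> str:
    """Return the name of the switch with the lower System Identifier.

    Each switch is given as (name, system_priority, mac_address).
    """
    winner = min(switch_a, switch_b, key=lambda s: system_id(s[1], s[2]))
    return winner[0]


if __name__ == "__main__":
    # Equal default priorities: the lower MAC address decides.
    print(decision_maker(("SW1", 32768, "000d.661f.a800"),
                         ("SW2", 32768, "000d.661f.a900")))
    # A lower configured priority overrides the MAC comparison.
    print(decision_maker(("SW1", 32768, "000d.661f.a800"),
                         ("SW2", 100, "000d.661f.a900")))
```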

The show lacp sys-id EXEC command shows the LACP System Identifier of a switch and verifies the LACP System Priority configuration.

C6500>sh lacp sys-id

32768,000d.661f.a800

C6500>

The System Identifier also ensures that aggregations are established only upon links that interconnect the same pair of devices. An Actor will consider aggregating links only when the received LACP information indicates the same Partner for all links in the potential aggregation.

A link is selected to become active according to its Port Identifier (2-byte Port Priority + 2-byte Port Number), in which the lower value indicates the higher priority to be selected as active links.

A set of up to 16 physical links can be defined for an LACP channel.

Through LACP, up to 8 of those physical links with the lowest port priority values will be selected as active aggregated links at any given time. The other links are placed in the hot-standby state and will be bundled into the EtherChannel should any of the active links go down.

When all the port priorities are left at the default of 32’768, the links with the lowest port numbers will be selected.

Note: LACP hot-standby interfaces should be configured with a higher LACP port priority value.
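The selection rule can be sketched as a sort on the Port Identifier. This is a simplified illustration, assuming each port is described by a (port priority, port number) pair; select_links is a hypothetical helper, and the port numbers mirror those a C3560 reports for Fa0/1 – 8 and Fa0/11 – 18.

```python
MAX_CONFIGURED = 16  # links that can be defined for one LACP channel
MAX_ACTIVE = 8       # links that can be active at any given time


def select_links(ports):
    """ports maps a port name to (port_priority, port_number).

    Returns (active, hot_standby), ranked by lowest Port Identifier first.
    """
    if len(ports) > MAX_CONFIGURED:
        raise ValueError("an LACP channel accepts at most 16 links")
    ranked = sorted(ports, key=lambda name: ports[name])
    return ranked[:MAX_ACTIVE], ranked[MAX_ACTIVE:]


names = [f"Fa0/{n}" for n in list(range(1, 9)) + list(range(11, 19))]

# All ports at the default priority: the lowest port numbers win.
defaults = {name: (32768, 0x103 + int(name.split("/")[1])) for name in names}
active, standby = select_links(defaults)
print(active)   # Fa0/1 through Fa0/8
print(standby)  # Fa0/11 through Fa0/18
```

Lowering the port priority value on a subset of ports moves them ahead of the rest regardless of port number, which is why standby candidates are given the numerically higher value.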

As with PAgP, LACP can be configured in one of two negotiating modes. Active mode (active) places a switch port into the active negotiating state, in which it sends LACP packets to actively negotiate an EtherChannel with the far end switch. Passive mode (passive) places a switch port into the passive negotiating state, in which it negotiates an EtherChannel only upon receiving LACP packets from the far end switch.
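The practical consequence of the two modes is that at least one end must be active for a channel to form. A minimal sketch of that rule, using a hypothetical helper name:

```python
LACP_MODES = {"active", "passive"}


def lacp_channel_forms(local_mode: str, remote_mode: str) -> bool:
    """Return True when LACP negotiation can start between the two ends.

    A passive port only answers LACP packets, so two passive ends
    never start negotiating.
    """
    if local_mode not in LACP_MODES or remote_mode not in LACP_MODES:
        raise ValueError("mode must be 'active' or 'passive'")
    return "active" in (local_mode, remote_mode)


for local in ("active", "passive"):
    for remote in ("active", "passive"):
        print(local, remote, lacp_channel_forms(local, remote))
```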

LACP automatically assigns each port configured to use LACP an administrative key value equal to its channel group identification number. The administrative key defines the ability of a port to be aggregated with other ports, normally based upon the link characteristics and hardware limitations.

For the purpose of identifying aggregation candidates, a set of links assigned the same key can be aggregated by a partner system; links with different key values should never be aggregated together.

Note: Links that belong to different port channels are assigned different administrative keys.
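Putting the two conditions together (same administrative key, same Partner on every link), candidate grouping can be sketched as follows. The function name, port names and the second partner MAC address are hypothetical and serve only to illustrate the rule.

```python
from collections import defaultdict


def aggregation_candidates(links):
    """Group ports that may be aggregated together.

    links is an iterable of (port, admin_key, partner_system_id).
    Ports share a group only when both the key and the partner match.
    """
    groups = defaultdict(list)
    for port, admin_key, partner_id in links:
        groups[(admin_key, partner_id)].append(port)
    return dict(groups)


links = [
    ("Fa0/1", 1, "0023.04a3.5c80"),
    ("Fa0/2", 1, "0023.04a3.5c80"),
    ("Fa0/3", 2, "0023.04a3.5c80"),  # different key: separate port channel
    ("Fa0/4", 1, "000d.661f.a800"),  # different partner: cannot join Fa0/1-2
]

for (key, partner), ports in aggregation_candidates(links).items():
    print(key, partner, ports)
```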

The following shows how to configure an EtherChannel by assigning switch ports for LACP negotiation:

Switch(config)#[lacp system-priority {lacp-sys-priority}] (optional)
Switch(config)#int [range] type mod/num [- type mod/num] [, type mod/num]
Switch(config-if)#switchport trunk encapsulation dot1q
Switch(config-if)#switchport mode trunk
Switch(config-if)#[channel-protocol lacp] (optional)
Switch(config-if)#channel-group port-group-num mode {active | passive}
Switch(config-if)#[lacp port-priority {lacp-port-priority}] (optional)

The configuration below negotiates an EtherChannel through Fa0/1 – 8 and Fa0/11 – 18. The switch should actively negotiate the channel and be the decision maker for the channel operation.

Interfaces Fa0/11 – 18 are left at their default port priority of 32’768, which is higher than the port priority of 100 configured on interfaces Fa0/1 – 8, so they are held as standby links to replace failed links in the channel.

C3560(config)#lacp system-priority 100
C3560(config)#int range fa0/1 - 8
C3560(config-if-range)#switchport trunk encapsulation dot1q
C3560(config-if-range)#switchport mode trunk
C3560(config-if-range)#channel-protocol lacp
C3560(config-if-range)#channel-group 1 mode active
C3560(config-if-range)#lacp port-priority 100
C3560(config-if-range)#exit
C3560(config)#
C3560(config)#int range fa0/11 - 18
C3560(config-if-range)#switchport trunk encapsulation dot1q
C3560(config-if-range)#switchport mode trunk
C3560(config-if-range)#channel-protocol lacp
C3560(config-if-range)#channel-group 1 mode active
C3560(config-if-range)#end
C3560#
C3560#sh etherchannel summary
Flags:  D - down        P - bundled in port-channel
        I - stand-alone s - suspended
        H - Hot-standby (LACP only)
--- output omitted ---

Number of channel-groups in use: 1
Number of aggregators:           1

Group  Port-channel  Protocol    Ports
------+-------------+-----------+-----------------------------------------------
1      Po1(SU)         LACP      Fa0/1(P)    Fa0/2(P)    Fa0/3(P)
                                 Fa0/4(P)    Fa0/5(P)    Fa0/6(P)
                                 Fa0/7(P)    Fa0/8(P)    Fa0/11(H)
                                 Fa0/12(H)   Fa0/13(H)   Fa0/14(H)
                                 Fa0/15(H)   Fa0/16(H)   Fa0/17(H)
                                 Fa0/18(H)

C3560#
C3560#sh lacp internal
Flags:  S - Device is requesting Slow LACPDUs
        F - Device is requesting Fast LACPDUs
        A - Device is in Active mode       P - Device is in Passive mode

Channel group 1
                            LACP port     Admin     Oper    Port        Port
Port      Flags   State     Priority      Key       Key     Number      State
Fa0/1     SA      bndl      100           0x1       0x1     0x104       0x3D
Fa0/2     SA      bndl      100           0x1       0x1     0x105       0x3D
Fa0/3     SA      bndl      100           0x1       0x1     0x106       0x3D
Fa0/4     SA      bndl      100           0x1       0x1     0x107       0x3D
Fa0/5     SA      bndl      100           0x1       0x1     0x108       0x3D
Fa0/6     SA      bndl      100           0x1       0x1     0x109       0x3D
Fa0/7     SA      bndl      100           0x1       0x1     0x10A       0x3D
Fa0/8     SA      bndl      100           0x1       0x1     0x10B       0x3D
Fa0/11    SA      hot-sby   32768         0x1       0x1     0x10E       0x5
Fa0/12    SA      hot-sby   32768         0x1       0x1     0x10F       0x5
Fa0/13    SA      hot-sby   32768         0x1       0x1     0x110       0x5
Fa0/14    SA      hot-sby   32768         0x1       0x1     0x111       0x5
Fa0/15    SA      hot-sby   32768         0x1       0x1     0x112       0x5
Fa0/16    SA      hot-sby   32768         0x1       0x1     0x113       0x5
Fa0/17    SA      hot-sby   32768         0x1       0x1     0x114       0x5
Fa0/18    SA      hot-sby   32768         0x1       0x1     0x115       0x5
C3560#
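The Port State column in the show lacp internal output is a bit field. The sketch below decodes it, assuming the Actor state bit layout defined by the IEEE link aggregation standard (bit 0 is LACP Activity, bit 7 is Expired); decode_port_state is a hypothetical helper name.

```python
# Bit 0 first; layout assumed from the IEEE 802.3ad / 802.1AX Actor state field.
LACP_STATE_BITS = [
    "Activity",         # 1 = active mode, 0 = passive mode
    "Timeout",          # 1 = fast (short) timeout, 0 = slow
    "Aggregation",      # link may be aggregated
    "Synchronization",  # link is in sync with its aggregator
    "Collecting",       # receiving frames on the bundle
    "Distributing",     # transmitting frames on the bundle
    "Defaulted",        # using default partner information
    "Expired",          # partner information has timed out
]


def decode_port_state(value: int) -> list:
    """Return the names of the bits set in an LACP port state byte."""
    return [name for bit, name in enumerate(LACP_STATE_BITS) if value & (1 << bit)]


print(decode_port_state(0x3D))  # bundled ports
print(decode_port_state(0x05))  # hot-standby ports
```

Read this way, the bundled ports (0x3D) are active, synchronized, collecting and distributing, whereas the hot-standby ports (0x5) are active and aggregatable but neither in sync nor forwarding.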

Troubleshooting EtherChannel

Caution: Mixing manual mode (on) with PAgP or LACP modes (desirable / auto or active / passive), or with ports that have no EtherChannel configuration, can result in bridging loops and broadcast storms!

EtherChannel Misconfiguration Scenarios

The scenarios in the figure above illustrate how a bridging loop can occur when an EtherChannel is misconfigured.

A bridging loop occurs in Scenario #1 because all interfaces are placed into the spanning tree forwarding state.

C2950#sh spanning-tree
--- output omitted ---
Interface        Role Sts Cost      Prio.Nbr Type
---------------- ---- --- --------- -------- --------------------------------
Po1              Desg FWD 12        128.65   P2p
C2950#
================================================================================
C3560#sh spanning-tree
--- output omitted ---
Interface           Role Sts Cost      Prio.Nbr Type
------------------- ---- --- --------- -------- --------------------------------
Fa0/1               Root FWD 19        128.3    P2p
Fa0/2               Desg FWD 19        128.4    P2p
C3560#
00:01:30: %SW_MATM-4-MACFLAP_NOTIF: Host 0011.2029.6802 in vlan 1 is flapping between port Fa0/2 and port Fa0/1
00:02:10: %SW_MATM-4-MACFLAP_NOTIF: Host 0011.2029.6802 in vlan 1 is flapping between port Fa0/2 and port Fa0/1
00:02:31: %SW_MATM-4-MACFLAP_NOTIF: Host 0011.2029.6802 in vlan 1 is flapping between port Fa0/2 and port Fa0/1
C3560#

In Scenario #2, C2950 receives BPDUs on both of its member interfaces, and therefore detects the misconfiguration and places its interfaces into the err-disabled state to prevent a bridging loop from occurring.

C3560#sh spanning-tree
--- output omitted ---
Interface           Role Sts Cost      Prio.Nbr Type
------------------- ---- --- --------- -------- --------------------------------
Fa0/1               Desg FWD 19        128.3    P2p
Fa0/2               Desg FWD 19        128.4    P2p
C3560#
================================================================================
C2950#sh spanning-tree
--- output omitted ---
Interface        Role Sts Cost      Prio.Nbr Type
---------------- ---- --- --------- -------- --------------------------------
Po1              Root FWD 12        128.65   P2p
C2950#
00:01:34: %PM-4-ERR_DISABLE: channel-misconfig error detected on Po1, putting Fa0/1 in err-disable state
00:01:34: %PM-4-ERR_DISABLE: channel-misconfig error detected on Po1, putting Fa0/2 in err-disable state
C2950#

The show etherchannel [channel-group-num] summary EXEC command displays the overall status of a particular channel or all the channels configured on a switch.

It shows the operational status of each channel and of each port associated with the channel, along with the flag(s) that indicate the state of the port.

Note: If a channel-group number is not specified, all channel groups are displayed.

C3750>sh etherchannel summary
Flags:  D - down        P - in port-channel
        I - stand-alone s - suspended
        H - Hot-standby (LACP only)
        R - Layer3      S - Layer2
        U - in use      f - failed to allocate aggregator
        u - unsuitable for bundling
        w - waiting to be aggregated
        d - default port

Number of channel-groups in use: 1
Number of aggregators:           1

Group  Port-channel  Protocol    Ports
------+-------------+-----------+-----------------------------------------------
1      Po1(SU)          -        Gi1/0/1(P)  Gi2/0/1(D)
C3750>

The show etherchannel load-balance EXEC command displays the load distribution algorithm.

C3750#sh etherchannel load-balance
EtherChannel Load-Balancing Configuration:
        src-mac

EtherChannel Load-Balancing Addresses Used Per-Protocol:
Non-IP: Source MAC address
  IPv4: Source MAC address
  IPv6: Source MAC address

C3750#
C3750#conf t
Enter configuration commands, one per line.  End with CNTL/Z.
C3750(config)#port-channel load-balance ?
  dst-ip       Dst IP Addr
  dst-mac      Dst Mac Addr
  src-dst-ip   Src XOR Dst IP Addr
  src-dst-mac  Src XOR Dst Mac Addr
  src-ip       Src IP Addr
  src-mac      Src Mac Addr

C3750(config)#
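To give a feel for what the MAC-based methods listed above do, here is a deliberately simplified sketch. It assumes the link is chosen from the low-order 3 bits of the selected address (or of the XOR of both addresses), then mapped onto the number of links with a modulo. The real hashing is platform-specific, so treat pick_link as a hypothetical model rather than the switch's algorithm; the destination MAC address is also just an example value.

```python
def mac_to_int(mac: str) -> int:
    """Convert a Cisco-style dotted MAC address to an integer."""
    return int(mac.replace(".", ""), 16)


def pick_link(method: str, src_mac: str, dst_mac: str, n_links: int) -> int:
    """Return the index of the member link a frame would use (simplified)."""
    src, dst = mac_to_int(src_mac), mac_to_int(dst_mac)
    if method == "src-mac":
        value = src
    elif method == "dst-mac":
        value = dst
    elif method == "src-dst-mac":
        value = src ^ dst  # XOR, as in the CLI help text
    else:
        raise ValueError("unsupported method in this sketch")
    return (value & 0x7) % n_links  # low-order 3 bits, spread over the links


src, dst = "0011.2029.6802", "0023.04a3.5c80"
for method in ("src-mac", "dst-mac", "src-dst-mac"):
    print(method, pick_link(method, src, dst, n_links=4))
```

Whatever the exact hash, the key property is that the same address pair always maps to the same link, so frames of one conversation are not reordered; the trade-off is that traffic between few hosts may not spread evenly.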

The show etherchannel port EXEC command displays the current status for each member port.

The following is a command output excerpt from a PAgP EtherChannel:

SW1#sh etherchannel port
                Channel-group listing:
                ----------------------

Group: 1
----------
                Ports in the group:
                -------------------
Port: Fa0/1
------------

Port state    = Up Mstr In-Bndl
Channel group = 1           Mode = Desirable-Sl    Gcchange = 0
Port-channel  = Po1         GC   = 0x00010001      Pseudo port-channel = Po1
Port index    = 0           Load = 0x00            Protocol =   PAgP

Flags:  S - Device is sending Slow hello.  C - Device is in Consistent state.
        A - Device is in Auto mode.        P - Device learns on physical port.
        d - PAgP is down.
Timers: H - Hello timer is running.        Q - Quit timer is running.
        S - Switching timer is running.    I - Interface timer is running.

Local information:
                                Hello    Partner  PAgP     Learning  Group
Port      Flags State   Timers  Interval Count   Priority   Method  Ifindex
Fa0/1     SC    U6/S7   H       30s      1        128        Any      29

Partner's information:

          Partner              Partner          Partner         Partner Group
Port      Name                 Device ID        Port       Age  Flags   Cap.
Fa0/1     SW2                  0023.04a3.5c80   Fa0/1       26s SAC     10001

Age of the port in the current state: 0d:00h:00m:56s
SW1#

The following is a command output excerpt from an LACP EtherChannel:

SW1#sh etherchannel port
                Channel-group listing:
                ----------------------

Group: 1
----------
                Ports in the group:
                -------------------
Port: Fa0/1
------------

Port state    = Up Mstr In-Bndl
Channel group = 1           Mode = Active          Gcchange = -
Port-channel  = Po1         GC   =   -             Pseudo port-channel = Po1
Port index    = 0           Load = 0x00            Protocol =   LACP

Flags:  S - Device is sending Slow LACPDUs   F - Device is sending fast LACPDUs.
        A - Device is in active mode.        P - Device is in passive mode.

Local information:
                            LACP port     Admin     Oper    Port        Port
Port      Flags   State     Priority      Key       Key     Number      State
Fa0/1     SA      bndl      32768         0x1       0x1     0x1         0x3D

Partner's information:

                  LACP port                        Oper    Port    Port
Port      Flags   Priority  Dev ID          Age    Key     Number  State
Fa0/1     SP      32768     0023.04a3.5c80   0s    0x1     0x104   0x3C

Age of the port in the current state: 0d:00h:00m:54s
SW1#

The EtherChannel member ports must be configured with the same settings, such as speed, duplex, MTU, mode of operation (access or trunk), native VLAN, and set of allowed VLANs, in order to be compatible and bundled into an EtherChannel.
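A quick way to reason about compatibility is to compare each candidate port against a reference member, setting by setting. The sketch below does that on plain dictionaries; find_mismatches and the setting names are hypothetical, and the values are illustrative rather than read from a switch.

```python
def find_mismatches(reference: dict, candidate: dict) -> dict:
    """Return {setting: (reference_value, candidate_value)} for every difference."""
    return {
        setting: (value, candidate.get(setting))
        for setting, value in reference.items()
        if candidate.get(setting) != value
    }


gi1_1 = {"speed": 1000, "duplex": "full", "mtu": 1500,
         "mode": "trunk", "native_vlan": 1, "allowed_vlans": "1-4094"}
gi1_2 = dict(gi1_1, mtu=9216)  # same settings except for the MTU

for setting, (ref, cand) in find_mismatches(gi1_1, gi1_2).items():
    print(f"{setting}: reference is {ref}, candidate is {cand}")
```

A port is a bundling candidate only when the returned dictionary is empty; any entry corresponds to the kind of inconsistency that causes a member port to be suspended.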

Listed below are some error messages caused by member port configuration inconsistencies. Each message shows the cause of the error in parentheses.

10:21:04.795: %EC-SP-5-CANNOT_BUNDLE2: Gi1/2 is not compatible with Gi1/1 and will be suspended (MTU of Gi1/2 is 9216, Gi1/1 is 1500)
--------------------------------------------------------------------------------
00:24:28.543: %EC-5-CANNOT_BUNDLE2: Fa1/0/2 is not compatible with Fa1/0/1 and will be suspended (duplex of Fa1/0/2 is half, Fa1/0/1 is full)
--------------------------------------------------------------------------------
00:29:12.917: %EC-5-CANNOT_BUNDLE2: Fa1/0/2 is not compatible with Fa1/0/1 and will be suspended (Broadcast suppression: Level of Fa1/0/2 is 10.00%, 10.00%. Level of Fa1/0/1 is not configured.)
--------------------------------------------------------------------------------
00:34:33.521: %EC-5-CANNOT_BUNDLE2: Fa1/0/2 is not compatible with Fa1/0/1 and will be suspended (flow control receive of Fa1/0/2 is on, Fa1/0/1 is off)
--------------------------------------------------------------------------------
00:39:27.734: %EC-5-CANNOT_BUNDLE2: Fa1/0/2 is not compatible with Fa1/0/1 and will be suspended (access vlan of Fa1/0/2 is 2, Fa1/0/1 is 1)
--------------------------------------------------------------------------------
00:42:23.451: %EC-5-CANNOT_BUNDLE2: Fa1/0/2 is not compatible with Fa1/0/1 and will be suspended (native vlan of Fa1/0/2 is 2, Fa1/0/1 id 1)
--------------------------------------------------------------------------------
00:54:05.535: %EC-5-CANNOT_BUNDLE2: Fa1/0/2 is not compatible with Fa1/0/1 and will be suspended (vlan mask is different)
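When many such messages end up in a syslog file, the suspended port and the reason can be pulled out with a regular expression. The pattern below is a sketch written against the sample messages in this section only; other IOS releases may format the message differently, and parse_cannot_bundle is a hypothetical helper.

```python
import re

# Matches both %EC-5-CANNOT_BUNDLE2 and %EC-SP-5-CANNOT_BUNDLE2.
CANNOT_BUNDLE = re.compile(
    r"%EC(?:-[A-Z]+)?-5-CANNOT_BUNDLE2: "
    r"(?P<port>\S+) is not compatible with (?P<peer>\S+) "
    r"and will be suspended \((?P<reason>.*)\)\s*$"
)


def parse_cannot_bundle(line: str):
    """Return a dict with port, peer and reason, or None if the line differs."""
    match = CANNOT_BUNDLE.search(line)
    return match.groupdict() if match else None


sample = ("00:24:28.543: %EC-5-CANNOT_BUNDLE2: Fa1/0/2 is not compatible with "
          "Fa1/0/1 and will be suspended (duplex of Fa1/0/2 is half, Fa1/0/1 is full)")
print(parse_cannot_bundle(sample))
```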
